Large Vision Models (LVMs) have transformed the field of computer vision, setting new benchmarks in image recognition, image segmentation, and object detection. Historically, convolutional neural networks (CNNs) have dominated computer vision tasks. However, with the introduction of the Transformer architecture, which was initially successful in Natural Language Processing (NLP), the landscape has shifted. Transformer-based models now match or surpass CNNs on many computer vision benchmarks.

In this blog, we will discuss when it makes sense to use transformer-based LVMs and when CNN architectures are more appropriate. We will then explore the underlying architecture of transformer-based large vision models, offering insights into how they function.

What Are Large Vision Models?

Large Vision Models (LVMs) are advanced models that tackle various visual tasks. They typically involve millions or even billions of parameters, which enables them to identify intricate visual patterns across diverse sets of images. These large models are pre-trained on large general-purpose datasets, such as ImageNet, and later fine-tuned for more specialized tasks, including object detection, segmentation, and image classification, making them useful in many real-world applications.

While previous state-of-the-art vision models relied on convolutional neural networks, many of today’s LVMs are built on transformer-based architectures such as Vision Transformers (ViTs), which often outperform those earlier architectures.

LVMs can also be combined with natural language models within multimodal systems and thus solve tasks that require both vision and language understanding, such as image captioning or visual question answering. Generally speaking, LVMs are versatile, able to generalize across diverse domains, and very effective in various visual recognition tasks.

When Should I Use LVMs?

Large Vision Models outperform CNNs on several computer vision tasks. However, when does it make sense to use them, and when are CNNs better suited? The table below summarizes several aspects to consider. While LVMs often offer superior accuracy, CNNs remain a practical and efficient choice for many real-time applications.

| Aspect | LVMs (Large Vision Models) | CNNs (Convolutional Neural Networks) |
|---|---|---|
| Performance | Higher accuracy and generalization but slower | Competitive performance, faster inference, task-specific |
| Computational Cost | High GPU/TPU requirements, costly at scale | More efficient, can run on mobile/edge devices |
| Scalability | Highly scalable with vast data and new tasks | Scales well on fixed-size tasks, less generalizable |
| Deployment Complexity | Higher infrastructure cost, complex to deploy | Mature tooling, easier optimization and deployment |
| Latency | Slower inference, needs optimization | Typically faster, suited to real-time applications |
| Interpretability | Harder to interpret, relies on post-hoc analysis | More interpretable via feature/activation maps |
| Cost | Higher deployment and compute costs | Generally cheaper in both training and inference |
| Use Case Fit | Best for multimodal, few-shot, large-scale tasks | Suitable for specific, well-defined image tasks |

How Do Large Vision Models Work?

In this section, we will look at how Large Vision Models work by delving into the components of the Vision Transformer (ViT) architecture. The architecture was introduced by researchers at Google in the paper “An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale,” which was posted to arXiv in late 2020 and published at ICLR 2021. Before this paper, the Transformer architecture was used mainly in the NLP domain and had seen only limited use in computer vision.

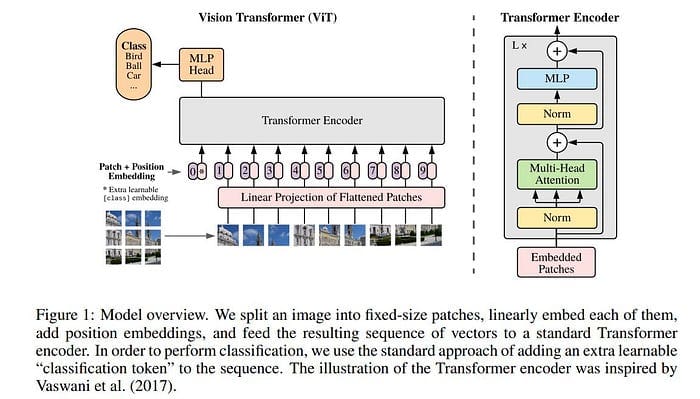

As shown in the figure above, transformer-based vision models work by first splitting the image into patches, which play the role of tokens in NLP tasks. Each flattened patch is then linearly projected, and positional embeddings are added. The result is fed to the Transformer encoder, and a multi-layer perceptron (MLP) head is used for classification.

Creation of Patches

Since transformers operate on a sequence of vectors, we need some way to convert the image into a 1D sequence for the transformer to process. Creating patches also reduces computational complexity and preserves the image’s local spatial structure.

To accomplish this, an image is split into a sequence of non-overlapping patches.

If the input image has dimensions $H \times W \times C$ (where $H$ is height, $W$ is width, and $C$ is the number of channels), the image is divided into $N = \frac{HW}{P^2}$ patches of $P \times P$ pixels, where $P$ is the chosen patch size. Each patch is flattened into a vector of size $P^2 \cdot C$. From this point on, each patch is treated as a “token,” akin to words in Natural Language Processing (NLP) tasks.

The output of this step is a sequence of $N$ flattened patches, that is, a matrix of shape $N \times (P^2 \cdot C)$.
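The patch-creation step can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `patchify`, the 224×224 RGB input, and the 16×16 patch size are assumed values chosen for the example.

```python
import numpy as np

def patchify(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping, flattened patches.

    Returns an array of shape (N, P*P*C) with N = (H // P) * (W // P).
    """
    h, w, c = image.shape
    p = patch_size
    if h % p != 0 or w % p != 0:
        raise ValueError("image height and width must be divisible by patch_size")
    # (H, W, C) -> (H/P, P, W/P, P, C): separate the patch grid from the pixels inside each patch
    grid = image.reshape(h // p, p, w // p, p, c)
    # Move the two grid axes to the front: (H/P, W/P, P, P, C)
    grid = grid.transpose(0, 2, 1, 3, 4)
    # Flatten every patch into a single vector: (N, P*P*C)
    return grid.reshape(-1, p * p * c)

# Illustrative input (assumed values): a random 224x224 RGB image, 16x16 patches
image = np.random.default_rng(0).random((224, 224, 3))
patches = patchify(image, patch_size=16)
print(patches.shape)  # (196, 768)
```

With these assumed sizes the image yields 14 × 14 = 196 patches, each flattened to 16 · 16 · 3 = 768 values.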

Linear Projection of Flattened Patches

The linear projection layer transforms each flattened patch into a vector representation of the size the transformer expects as input. It can also reduce dimensionality and map the patches into a latent space in which better data representations can be learned.

Each flattened patch is passed through a learnable linear projection. If a flattened patch has dimension $P^2 \cdot C$ (patch side length $P$ and $C$ channels), it is linearly projected into a fixed dimension $D$, creating patch embeddings of size $D$. Mathematically, the projection can be represented as:

$$z_i = x_i E$$

Where $x_i \in \mathbb{R}^{P^2 \cdot C}$ is the $i$-th flattened patch, $E \in \mathbb{R}^{(P^2 \cdot C) \times D}$ is the projection matrix, and $z_i \in \mathbb{R}^{D}$ is the resulting patch embedding.

For example, let’s say we wanted to reduce the dimensionality of the flattened patch to reduce the computational complexity. We can do this by choosing $D$ smaller than $P^2 \cdot C$, so that the projection maps each flattened patch to a shorter vector.
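As a rough sketch of this projection, the snippet below maps each flattened patch to a shorter embedding with a single matrix product. The sizes (196 patches of length 768, reduced to 256) are assumed for illustration, the weights are random stand-ins for learned parameters, and the bias term is an optional addition that the text above does not mention.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed sizes: N patches, flattened patch length, embedding dimension
num_patches, patch_dim, embed_dim = 196, 768, 256

# Stand-in for the flattened patches produced by the patch-creation step
flat_patches = rng.random((num_patches, patch_dim))

# Projection matrix; learned during training in a real model, random here
E = rng.normal(scale=0.02, size=(patch_dim, embed_dim))
b = np.zeros(embed_dim)  # optional bias (an assumption, not part of the text above)

# Project every patch at once: (N, patch_dim) @ (patch_dim, embed_dim) -> (N, embed_dim)
patch_embeddings = flat_patches @ E + b
print(patch_embeddings.shape)  # (196, 256)
```

Because the same matrix is applied to every patch, the number of projection parameters depends only on the patch and embedding sizes, not on the number of patches.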

Patch + Position Embedding Creation

Positional embeddings provide spatial information that would otherwise be lost, because self-attention on its own is insensitive to the order of its input tokens.

This step introduces spatial awareness by adding a fixed or learnable positional encoding to each patch embedding:

$$\tilde{z}_i = z_i + p_i$$

Where $p_i$ is the positional embedding for the $i$-th patch, and $z_i$ is the patch embedding. This produces the final sequence representation $Z = [\tilde{z}_1, \tilde{z}_2, \dots, \tilde{z}_N]$ that is passed to the transformer blocks.
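The two options mentioned above, learnable and fixed positional encodings, can be sketched as follows. The sizes are assumed, the "learnable" table is simply randomly initialized here, and the sine/cosine table is one common choice of fixed encoding (borrowed from the original NLP Transformer), not necessarily the one a given vision model uses. The helper name `sinusoidal_positions` is hypothetical.

```python
import numpy as np

def sinusoidal_positions(num_positions: int, dim: int) -> np.ndarray:
    """Fixed sine/cosine position table of shape (num_positions, dim); dim must be even."""
    positions = np.arange(num_positions)[:, None]                   # (N, 1)
    freqs = np.exp(-np.log(10000.0) * np.arange(0, dim, 2) / dim)   # (dim/2,)
    angles = positions * freqs                                      # (N, dim/2)
    table = np.zeros((num_positions, dim))
    table[:, 0::2] = np.sin(angles)  # even columns hold sines
    table[:, 1::2] = np.cos(angles)  # odd columns hold cosines
    return table

rng = np.random.default_rng(0)
num_patches, embed_dim = 196, 256  # assumed sizes

# Stand-in for the patch embeddings from the projection step
patch_embeddings = rng.normal(size=(num_patches, embed_dim))

# Learnable variant: one trainable vector per position (randomly initialized here)
learned_pos = rng.normal(scale=0.02, size=(num_patches, embed_dim))
# Fixed variant: deterministic sine/cosine table
fixed_pos = sinusoidal_positions(num_patches, embed_dim)

# Element-wise addition keeps the sequence shape unchanged
sequence_learned = patch_embeddings + learned_pos
sequence_fixed = patch_embeddings + fixed_pos
print(sequence_learned.shape, sequence_fixed.shape)  # (196, 256) (196, 256)
```

Either way, the positional information is added element-wise, so the sequence keeps the same shape as the patch embeddings.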

Transformer Encoder Architecture

In the next step, the transformer encoder architecture allows the model to learn complex representations of the input data while capturing global information within the image.

The process begins by inputting the position-encoded patch embeddings from the last step.

The core of this block consists of multi-head self-attention (MSA) and feed-forward network (FFN) layers, alternating with layer normalization. Self-attention allows the model to capture dependencies between patches, regardless of their spatial proximity. For a given attention head, self-attention is computed as:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

Where $Q$, $K$, and $V$ are the queries, keys, and values derived from the patch embeddings, and $d_k$ is the dimension of the keys. This attention mechanism captures the relations between different patches globally, and it is followed by pointwise feed-forward layers (MLPs) that refine the representations.
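A single attention head can be sketched in NumPy as scaled dot-product attention over the patch tokens. The token count, embedding size, and head size below are assumed, and the projection weights are random placeholders for learned parameters. A full encoder block would add multiple heads, residual connections, layer normalization, and the feed-forward layers.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Scaled dot-product self-attention for one head.

    tokens: (N, D); w_q, w_k, w_v: (D, d_k). Returns the output (N, d_k) and weights (N, N).
    """
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # similarity of every patch to every other patch
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
num_tokens, embed_dim, head_dim = 196, 256, 64  # assumed sizes
tokens = rng.normal(size=(num_tokens, embed_dim))
w_q, w_k, w_v = (
    rng.normal(scale=embed_dim ** -0.5, size=(embed_dim, head_dim)) for _ in range(3)
)

output, weights = self_attention(tokens, w_q, w_k, w_v)
print(output.shape, weights.shape)  # (196, 64) (196, 196)
```

The (N, N) weight matrix is what makes the attention global: every patch attends to every other patch, which is also why the cost grows quadratically with the number of patches.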

MLP Head

After the Transformer encoder has processed the patch embeddings through multiple layers, the output corresponding to a special class token, which is prepended to the patch sequence before encoding, serves as an aggregated image representation. This class token output is fed into the multi-layer perceptron (MLP) head, which typically includes fully connected layers with nonlinear activations. The MLP head outputs scores for the final classification, allowing the model to make predictions on image recognition tasks.
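The classification step can be sketched as follows, assuming the class token sits at index 0 of the encoder output. The sizes, the single hidden layer with a tanh activation, and the 10-class output are illustrative assumptions, and all weights are random placeholders for learned parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, embed_dim, hidden_dim, num_classes = 196, 256, 512, 10  # assumed sizes

# Stand-in for the encoder output: row 0 is the class token, rows 1..N are the patches
encoded = rng.normal(size=(num_patches + 1, embed_dim))
cls_repr = encoded[0]  # aggregated image representation

# MLP head: one hidden layer with a nonlinearity, then a linear output layer
w1 = rng.normal(scale=0.02, size=(embed_dim, hidden_dim))
b1 = np.zeros(hidden_dim)
w2 = rng.normal(scale=0.02, size=(hidden_dim, num_classes))
b2 = np.zeros(num_classes)

hidden = np.tanh(cls_repr @ w1 + b1)
logits = hidden @ w2 + b2  # one score per class

# Softmax turns the scores into class probabilities
exp_logits = np.exp(logits - logits.max())
probs = exp_logits / exp_logits.sum()
predicted_class = int(np.argmax(probs))
print(logits.shape, predicted_class)
```

Only the class token row is passed to the head. The patch rows influence the prediction indirectly, through the attention layers that mixed their information into the class token.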

Notable LVM Architectures

Now that we’ve examined the general architecture of transformer-based large vision models, let’s briefly discuss some of the variants that are currently being used. These architectures achieve state-of-the-art or competitive performance across various tasks, such as image classification and object detection.

Vision Transformers (ViT)

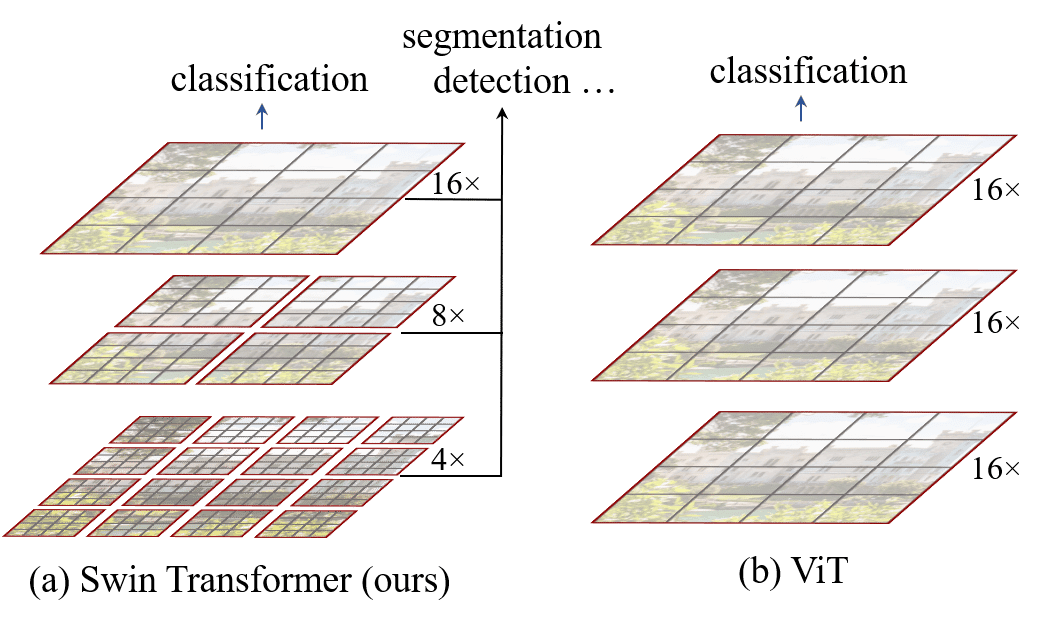

ViT is the architecture we presented in the last section. It applies the Transformer, which was originally designed for natural language processing (NLP), to image data. It treats images as sequences of patches (similar to words in NLP) and utilizes self-attention mechanisms to model global dependencies within an image.

Swin Transformer

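The Swin Transformer builds on ViT by introducing a hierarchical architecture with shifted windows. Self-attention is computed within local windows, and the windows are shifted between successive layers so that information can flow across window boundaries. This enables the model to capture local and global information at a lower computational cost. Like a CNN, this architecture incorporates locality and builds a hierarchy of feature maps at progressively lower resolutions.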
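The windowing idea can be illustrated with a short NumPy sketch. The 56×56 grid of tokens, 96 channels, window size of 7, and shift of 3 are assumed values, and the cyclic shift via `np.roll` is one way to realize shifted windows. The sketch omits the attention computation itself and the masking a full implementation needs for tokens that wrap around the border.

```python
import numpy as np

def window_partition(feature_map: np.ndarray, window_size: int) -> np.ndarray:
    """Split an (H, W, C) token grid into non-overlapping windows.

    Returns an array of shape (num_windows, window_size * window_size, C).
    """
    h, w, c = feature_map.shape
    m = window_size
    windows = feature_map.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return windows.reshape(-1, m * m, c)

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(56, 56, 96))  # assumed token grid and channel count
window_size, shift = 7, 3                    # assumed window size and shift

# Regular windows: attention would run independently inside each 7x7 window
windows = window_partition(feature_map, window_size)

# Shifted windows: cyclically shift the grid, then partition again, so the new
# windows straddle the boundaries of the previous ones
shifted = np.roll(feature_map, shift=(-shift, -shift), axis=(0, 1))
shifted_windows = window_partition(shifted, window_size)
print(windows.shape, shifted_windows.shape)  # (64, 49, 96) (64, 49, 96)

# Number of token pairs attention has to score: global versus windowed
num_tokens = 56 * 56
global_pairs = num_tokens ** 2
window_pairs = windows.shape[0] * (window_size ** 2) ** 2
print(global_pairs, window_pairs)  # 9834496 153664
```

In this assumed setting, windowed attention scores about 64 times fewer token pairs than global attention, which is where the lower computational cost comes from.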

DETR Transformer

DETR (Detection Transformer) is an end-to-end object detection model that replaces hand-designed components (like region proposal networks and non-maximum suppression) with a transformer-based architecture. It extracts image features using a CNN backbone (such as ResNet), and then a transformer encoder-decoder predicts the set of objects directly.

Conclusion

Large Vision Models have pushed the boundaries of what is possible in computer vision, offering new levels of accuracy and outperforming CNNs on several key computer vision tasks like image classification and object detection. While they demand substantial resources and come with challenges, their ability to generalize and adapt to complex tasks sets them apart from smaller models. As the field evolves, LVMs will continue to play a crucial role in the future of AI-driven visual understanding.

For organizations looking to harness this technology, phData has seen remarkably quick time-to-value with LandingAI, whose no-code platform democratizes computer vision for enterprises. Their Native Snowflake application enables organizations to deploy and manage computer vision models directly within their Snowflake environment, making advanced visual AI capabilities more accessible and practical for businesses.

If you have questions or need guidance on implementing Large Vision Models or other AI solutions, please contact our expert team at phData.