Forecasting, whether it’s sales, demand, supply, or transportation, is a business planning method that helps analysts and planners better anticipate what is going to happen in their business.

Depending on the complexity of your business operations, legacy forecasting can be within a handful of spreadsheets, in an ERP planning software (like SAP APO), or as complex as a machine learning model.

For businesses on the Snowflake Data Cloud, forecasts can be done quickly and accurately with a cutting-edge machine learning model like Facebook’s Prophet using Snowpark for Python.

A significant part of running a Consumer Packaged Goods (CPG) business is forecasting demand across products, regions, stores, etc. Being able to accurately anticipate demand is key and often the best way to do this is to train and forecast a model on each product-store segment.

In this blog, we’ll go through an example of how to use Prophet on Snowpark that demonstrates how organizations can rapidly create accurate and meaningful Consumer Packaged Goods (CPG) forecasts in Snowflake.

First, let’s cover a few concepts.

What is Facebook Prophet?

Facebook’s Prophet is a powerful and easy-to-use deep-learning forecaster. It does a great job of handling trends, seasonality, holidays, and multiple co-regressors. It does require a lot of historical and stationary data, but it’s a great way to easily produce high-quality forecasts. Most importantly, it’s supported (along with lots of other packages) in Snowpark.

What is Snowpark? Why is it Good for Machine Learning?

Snowpark brings the full power of SQL running in Snowflake and allows developers to use their favorite languages in packages. Snowpark supports several languages, and here we’ll use Python, a popular language for data scientists.

We can use Snowpark to combine data from many sources and perform any needed feature engineering for machine learning. Then we can use Snowpark UDFs to train and forecast a dedicated machine learning model for each store and product.

Making a Forecast on Snowpark for Python

In this example, we’ll be using the Store-Item Demand Forecasting Challenge Kaggle dataset. This dataset is ideal because it contains historical sales data, which is generally the strongest indicator of the future besides other factors like pricing, promotions, distribution, or macroeconomic data.

To start, let’s take a closer look at the daily total sales.

All of the data looks to be stationary, which is fantastic! Because we have the data to support it, we will build a forecast at the day-store-product level for a 90-day horizon. To see the code behind the whole solution, check out this notebook.

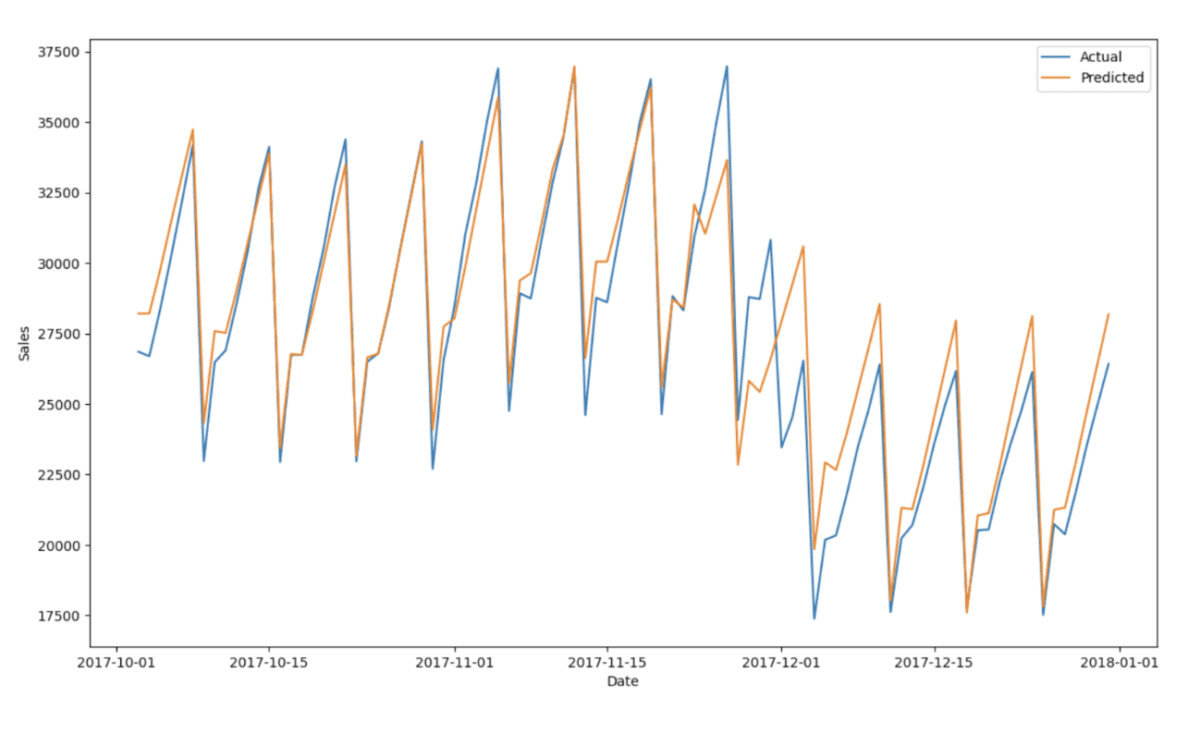

First, we’ll split the data and put the last 90 days of dates into a “test” set and the rest in a “train” set that we’ll feed to our model. This allows us to check our forecast performance on days the model hasn’t been trained on, simulating a real-world use case.

For the best results, we need to train and forecast each store-product individually. We can use Snowpark to transform the data into a table with one row per store-item pair and a column containing all of the past sales values. Then we’ll define a function to train a Prophet model and forecast 90 days into the future.

It’ll take just a few minutes to run and then we can flatten our data and compare the model’s prediction for the 90 days with the “test” data. Here we’re taking the total forecast sum for each day.

Conclusion

Snowpark allows your organization to quickly create accurate and powerful forecasts with the best machine learning tools available—all within Snowflake.

The fun doesn’t end here, we have a few more resources that might help you out on your journey. Feel free to browse our additional Snowpark resources.